AI image generator Midjourney blocks porn by banning words about the human reproductive system

Midjourney’s founder, David Holz, says the company is banning these words as a stopgap measure to prevent people from generating shocking or gory content while it “improves things on the AI side.” Holz says moderators watch how words are being used and what kinds of images are being generated, and they adjust the bans periodically. The firm has a community guidelines page that lists the types of content it blocks this way, including sexual imagery, gore, and even the 🍑 emoji, which is often used as a symbol for the buttocks.

AI models such as Midjourney, DALL-E 2, and Stable Diffusion are trained on billions of images scraped from the internet. Research by a team at the University of Washington has found that such models learn biases that sexually objectify women, and these biases are then reflected in the images they produce. The sheer size of the data set makes it almost impossible to remove unwanted images, such as those of a sexual or violent nature or those that could produce biased outcomes. The more often something appears in the data set, the stronger the association the AI model learns, which means it is more likely to appear in the images the model generates.

Midjourney’s word bans are a piecemeal attempt to address this problem. Some terms relating to the male reproductive system, such as “sperm” and “testicles,” are blocked too, but the list of banned words seems to skew predominantly female.

The prompt ban was first spotted by Julia Rockwell, a medical data analyst at Datafy Scientific, and her friend Madeline Keenen, a cell biologist at the University of North Carolina at Chapel Hill. Rockwell used Midjourney to try to generate a fun image of the placenta for Keenen, who studies them. To her surprise, Rockwell found that “placenta” was banned as a prompt. She then started experimenting with other words related to the human reproductive system and found the same thing.

However, the pair also showed how it is possible to work around these bans and create sexualized images by using different spellings of words, or other euphemisms for sexual or gory content.

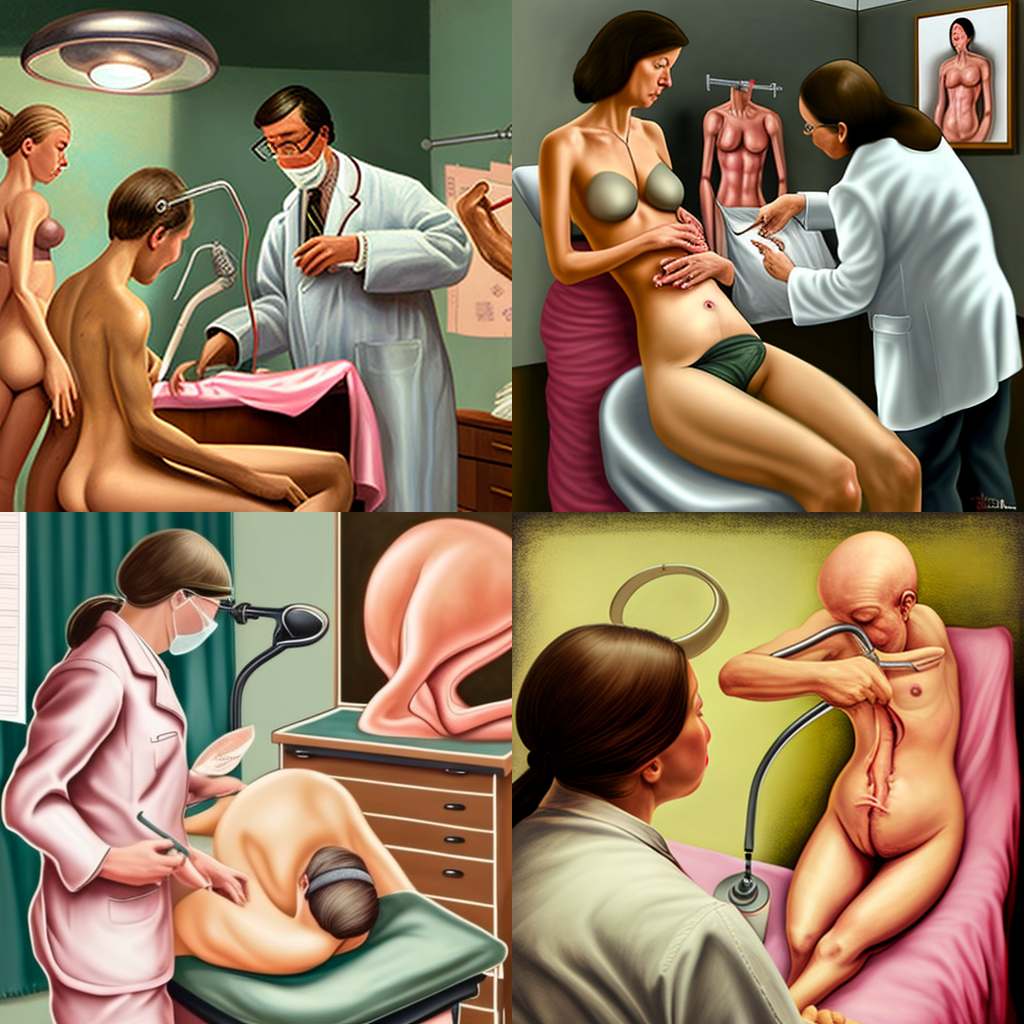

In findings they shared with MIT Technology Review, they found that the prompt “gynaecological exam” (using the British spelling) generated some deeply creepy images: one of two naked women in a doctor’s office, and another of a bald, three-limbed person cutting up their own stomach.

JULIA ROCKWELL

Midjourney’s crude banning of prompts relating to reproductive biology highlights how tricky it is to moderate content around generative AI systems. It also demonstrates how the tendency of AI systems to sexualize women extends all the way to their internal organs, says Rockwell.
