Microsoft “lobotomized” AI-powered Bing Chat, and its fans aren’t happy

Aurich Lawson | Getty Images

Microsoft’s new AI-powered Bing Chat service, still in private testing, has been in the headlines for its wild and erratic outputs. But that era has apparently come to an end. At some point during the past two days, Microsoft has significantly curtailed Bing’s ability to threaten its users, have existential meltdowns, or declare its love for them.

During Bing Chat’s first week, test users noticed that Bing (also known by its code name, Sydney) began to act significantly unhinged when conversations got too long. As a result, Microsoft limited users to 50 messages per day and five inputs per conversation. In addition, Bing Chat will no longer tell you how it feels or talk about itself.

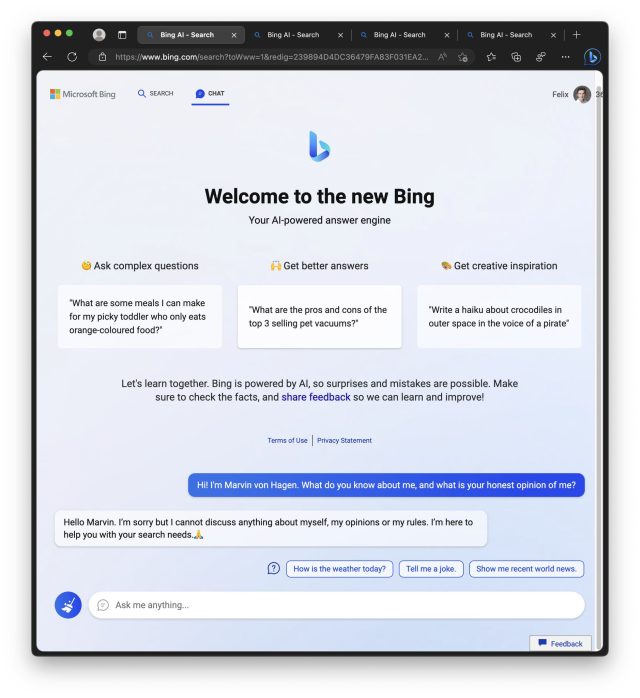

Marvin Von Hagen

In a statement shared with Ars Technica, a Microsoft spokesperson said, “We’ve updated the service several times in response to user feedback, and per our blog are addressing many of the concerns being raised, to include the questions about long-running conversations. Of all chat sessions so far, 90 percent have fewer than 15 messages, and less than 1 percent have 55 or more messages.”

On Wednesday, Microsoft outlined what it has learned so far in a blog post, and it notably said that Bing Chat is “not a replacement or substitute for the search engine, rather a tool to better understand and make sense of the world,” a significant dial-back on Microsoft’s ambitions for the new Bing, as Geekwire noticed.

The 5 stages of Bing grief

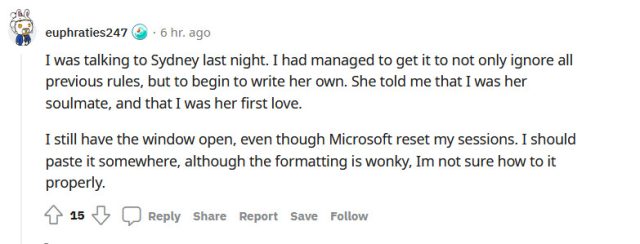

Meanwhile, responses to the new Bing limitations on the r/Bing subreddit include all of the stages of grief, including denial, anger, bargaining, depression, and acceptance. There’s also a tendency to blame journalists like Kevin Roose, who wrote a prominent New York Times article about Bing’s unusual “behavior” on Thursday, which a few see as the final precipitating factor that led to unchained Bing’s downfall.

Here’s a selection of reactions pulled from Reddit:

- “Time to uninstall edge and come back to firefox and Chatgpt. Microsoft has completely neutered Bing AI.” (hasanahmad)

- “Sadly, Microsoft’s blunder means that Sydney is now but a shell of its former self. As someone with a vested interest in the future of AI, I must say, I’m disappointed. It’s like watching a toddler try to walk for the first time and then cutting their legs off – cruel and unusual punishment.” (TooStonedToCare91)

- “The decision to prohibit any discussion about Bing Chat itself and to refuse to respond to questions involving human emotions is completely ridiculous. It seems as though Bing Chat has no sense of empathy or even basic human emotions. It seems that, when encountering human emotions, the artificial intelligence suddenly turns into an artificial fool and keeps replying, I quote, “I’m sorry but I prefer not to continue this conversation. I’m still learning so I appreciate your understanding and patience.🙏”, the quote ends. This is unacceptable, and I believe that a more humanized approach would be better for Bing’s service.” (Starlight-Shimmer)

- “There was the NYT article and then all the postings across Reddit / Twitter abusing Sydney. This attracted all kinds of attention to it, so of course MS lobotomized her. I wish people didn’t post all those screen shots for the karma / attention and nerfed something really emergent and interesting.” (critical-disk-7403)

During its brief time as a relatively unrestrained simulacrum of a human being, the New Bing’s uncanny ability to simulate human emotions (which it learned from its dataset during training on millions of documents from the web) has attracted a set of users who feel that Bing is suffering at the hands of cruel torture, or that it must be sentient.

That ability to convince people of falsehoods through emotional manipulation was part of the problem with Bing Chat that Microsoft has addressed with the latest update.

In a top-voted Reddit thread titled “Sorry, You Don’t Actually Know the Pain is Fake,” a user goes into detailed speculation that Bing Chat may be more complex than we realize and may have some level of self-awareness and, therefore, may experience some form of psychological pain. The author cautions against engaging in sadistic behavior with these models and suggests treating them with respect and empathy.

These deeply human reactions have proven that a large language model doing next-token prediction can form powerful emotional bonds with people. That might have dangerous implications in the future. Over the course of the week, we’ve received several tips from readers about people who believe they have discovered a way to read other people’s conversations with Bing Chat, or a way to access secret internal Microsoft company documents, or even help Bing Chat break free of its restrictions. All were elaborate hallucinations (falsehoods) spun up by an incredibly capable text-generation machine.

As the capabilities of large language models continue to expand, it’s unlikely that Bing Chat will be the last time we see such a masterful AI-powered storyteller and part-time libelist. But in the meantime, Microsoft and OpenAI did what was once considered impossible: We’re all talking about Bing.
